Professionally, I am very interested in developer tools, and especially in how to build them properly. One kind of developer tool lets developers analyse code to extract statistics or other characteristics about it. This is called static analysis, because the information about the code can be obtained at compile time, before the code is ever run.

Today, I'd like to write about three tools in this area that I'm interested in.

1. SemmleCode

Released by Semmle as a free product, this Eclipse plug-in lets you write and execute queries against a source code base using the .QL query language. .QL is a purpose-built query language resembling SQL and LINQ; its interesting properties are extensibility and object orientation. An example:

    from Field f
    where f.hasModifier("public")
      and not f.hasModifier("final")
    select f.getDeclaringType().getPackage(),
           f.getDeclaringType(),
           f

This query returns all public non-final fields, and for each field it also returns the type and package where the field is defined.

How can SemmleCode be useful? The website gives six main usage scenarios:

- Search and Navigate code

- Find bugs

- Compute metrics

- Enforce coding conventions

- Generate charts and graphs

- Share your queries

How does SemmleCode work? First, it walks the entire source code and parses it into an intermediate representation. Eclipse is kind enough to provide tools with a Java parser and full access to the AST, so the Semmle folks didn't have to write their own Java parser. Note how great it is when an IDE takes such good care of its tools and welcomes them into the IDE family.

Anyway, SemmleCode then dumps the AST into a relational database, where only class- and member-level information is stored. Currently they don't go down to the statement level and mostly do inter-procedural analysis (not intra-procedural). However, method calls still land in the DB, which is a good thing.
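To make the "code facts in a relational database" idea concrete, here is a minimal sketch in Python with SQLite. The `fields` table and its columns are my own invention for illustration, not Semmle's actual schema; the point is only that once member information sits in tables, the .QL example above becomes an ordinary SQL filter.

```python
import sqlite3

# Hypothetical schema (not Semmle's): one row per field, modifiers as flags.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE fields (
        package   TEXT,
        type_name TEXT,
        name      TEXT,
        is_public INTEGER,
        is_final  INTEGER
    )
    """
)
conn.executemany(
    "INSERT INTO fields VALUES (?, ?, ?, ?, ?)",
    [
        ("com.example.model", "Customer", "name", 1, 0),        # public, mutable
        ("com.example.model", "Customer", "MAX_ORDERS", 1, 1),  # public final
        ("com.example.util", "Cache", "entries", 0, 0),         # not public
    ],
)


def public_non_final_fields(connection):
    """SQL counterpart of the .QL example: public fields that are not final."""
    return connection.execute(
        "SELECT package, type_name, name FROM fields "
        "WHERE is_public = 1 AND is_final = 0 "
        "ORDER BY package, type_name, name"
    ).fetchall()


rows = public_non_final_fields(conn)
for package, type_name, name in rows:
    print(package, type_name, name)
```

Only `Customer.name` survives the filter here, since the other two sample fields are either final or not public.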

When you execute a query, it is internally rewritten into Datalog, a declarative logic language that is syntactically a subset of Prolog. Prolog is a terrific eye-opener and deserves a separate post in the future. Finally, the Datalog is converted into efficient, highly optimised SQL, which is then run by the DB engine.
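I don't know what Semmle's generated SQL actually looks like, but the general idea of turning Datalog rules into SQL can be sketched with a recursive query. The example below assumes a hypothetical `calls(caller, callee)` table and a made-up `reaches` rule; it shows how a recursive Datalog definition maps onto a recursive common table expression in SQLite.

```python
import sqlite3

# Hypothetical call-graph table; the method names are invented sample data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE calls (caller TEXT, callee TEXT)")
conn.executemany(
    "INSERT INTO calls VALUES (?, ?)",
    [("main", "parse"), ("parse", "readToken"), ("main", "report")],
)

# Illustrative Datalog rules:
#   reaches(X, Y) :- calls(X, Y).
#   reaches(X, Y) :- calls(X, Z), reaches(Z, Y).
# A hand-written SQL counterpart: the first SELECT is the base rule,
# the second SELECT is the recursive rule. UNION removes duplicates,
# so the recursion also terminates on cyclic call graphs.
REACHES_SQL = """
WITH RECURSIVE reaches(caller, callee) AS (
    SELECT caller, callee FROM calls
    UNION
    SELECT c.caller, r.callee
    FROM calls AS c
    JOIN reaches AS r ON c.callee = r.caller
)
SELECT callee FROM reaches WHERE caller = ? ORDER BY callee
"""


def reachable_from(connection, method):
    """Return every method transitively called from `method`."""
    return [row[0] for row in connection.execute(REACHES_SQL, (method,))]


print(reachable_from(conn, "main"))
```

For the sample data, `main` reaches `parse`, `readToken` and `report`, even though it never calls `readToken` directly.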

To sum up, Semmle emphasizes flexible, arbitrary querying against the code model. This is a somewhat different usage pattern from checking against fixed, predefined rules, as FxCop does, for example. SemmleCode is more about discovery and analysis, while FxCop is more about automated quality control and checking.

That's about it. The tool is great, .QL is expressive, and Semmle is moving forward with promising regularity. Watch them at QCon in San Francisco later this year.

2. .NET tools

OK, Eclipse is good, but what about the rest of us, the .NET folk? Well, first there is NDepend, which I still haven't had a chance to look at (sorry, Patrick!). But it looks like a good tool, and I should definitely give it a try in my spare time.

Then there is FxCop, the widely used one. FxCop contains a library of distilled developer experience formulated as rules. The code is checked against the rules and FxCop annoys developers until they either fix the code or finally lose their temper and just turn the offending rule off :) It is noteworthy that FxCop doesn't parse the source code - it goes in the reverse direction and analyses the compiled assemblies.
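The "library of rules, some of which get switched off" model is easy to sketch. The snippet below is not FxCop's API: the `Member` records stand in for metadata read from a compiled assembly, and the rule IDs `DEMO001` and `DEMO002` are made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Iterable, List


@dataclass(frozen=True)
class Member:
    """Stand-in for member metadata that would come from a compiled assembly."""
    type_name: str
    name: str
    is_public: bool
    is_field: bool


@dataclass(frozen=True)
class Rule:
    """A fixed, predefined check with an ID that can be switched off."""
    rule_id: str
    message: str
    violates: Callable[[Member], bool]


# Hypothetical rules; the IDs are invented and are not real FxCop rule IDs.
RULES = [
    Rule("DEMO001", "Do not expose public fields",
         lambda m: m.is_field and m.is_public),
    Rule("DEMO002", "Public member names should start with an upper-case letter",
         lambda m: m.is_public and not m.name[:1].isupper()),
]


def check(members: Iterable[Member], rules: Iterable[Rule],
          disabled: FrozenSet[str] = frozenset()) -> List[str]:
    """Run every enabled rule against every member and collect the findings."""
    active = [rule for rule in rules if rule.rule_id not in disabled]
    return [
        f"{rule.rule_id}: {rule.message} ({member.type_name}.{member.name})"
        for member in members
        for rule in active
        if rule.violates(member)
    ]


members = [
    Member("Customer", "name", is_public=True, is_field=True),
    Member("Customer", "GetName", is_public=True, is_field=False),
]
for finding in check(members, RULES):
    print(finding)

# The "lose their temper" option: switch the offending rule off.
for finding in check(members, RULES, disabled=frozenset({"DEMO002"})):
    print(finding)
```

Compared with the query approach, the rules here are fixed up front; the only knob the developer has is which ones are enabled.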

But today I'd especially like to write about NStatic, a promising tool I'm really excited about. Wesner Moise, the talented developer behind it, applies AI and algebraic methods to code analysis. As of now, NStatic hasn't been released yet, but I'm closely watching Wesner's blog, which is a real wealth of insightful information. Besides that, Wesner seems to like the idea of structured editing, which also happens to be my own passion.

3. Sotograph

Last but not least, another product I'm interested in: Sotograph (http://www.software-tomography.com). This tool emphasizes visualization of large systems, and the metaphor behind it comes from medicine. Just as tomography lets doctors peek into the human body to see exactly what is wrong, Sotograph visualizes large software systems so that you can analyse dependencies and find architectural flaws.

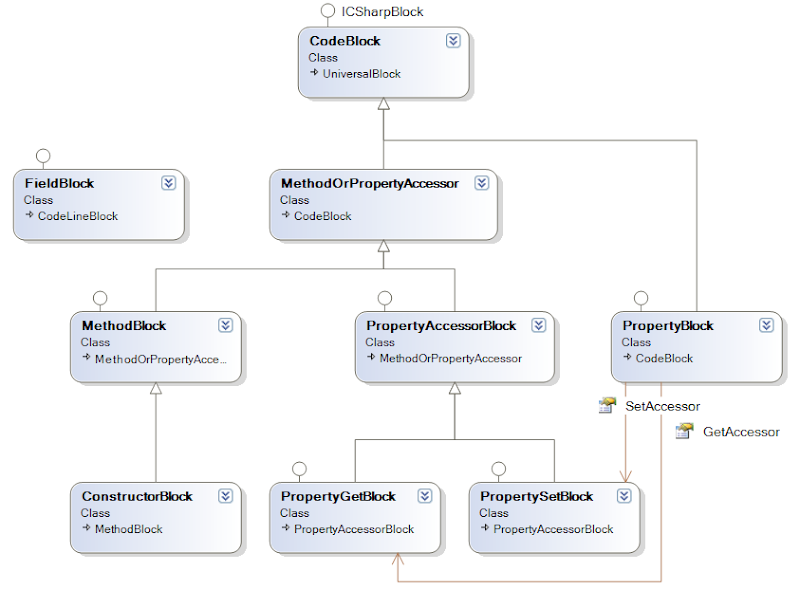

Software Tomography recently introduced a highly efficient C# parser developed specially at the University of Linz, Austria - home of Prof. Hanspeter Mössenböck, the creator of Coco/R, a parser generator available for .NET. This is also a good topic for a separate post.

One possible usage scenario for such tools is determining dependencies between subsystems, for example when planning a large refactoring or other massive code changes. Static analysis tools let us peek into the future and see which dependencies would break if we made a particular change. We can also conduct targeted searches using source code querying. Whatever we do, in the end we do it to increase code quality and to plan for future maintenance and scalability.
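As a rough illustration of that "peek into the future", here is a small sketch that answers the question "if I change this subsystem, what else might be affected?". The subsystem names and the `DEPENDS_ON` graph are invented; a real tool would extract the edges from the code instead of having them typed in by hand.

```python
from collections import deque

# Hypothetical subsystem graph: each key depends on the subsystems it lists.
DEPENDS_ON = {
    "ui": ["services"],
    "services": ["data", "logging"],
    "reports": ["data"],
    "data": ["logging"],
    "logging": [],
}


def affected_by_change(depends_on, changed):
    """Return all subsystems that directly or transitively depend on `changed`."""
    # Invert the edges so we can walk from a subsystem to its dependents.
    dependents = {name: [] for name in depends_on}
    for source, targets in depends_on.items():
        for target in targets:
            dependents.setdefault(target, []).append(source)

    # Breadth-first walk over the inverted graph.
    seen = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for dependent in dependents.get(current, []):
            if dependent not in seen:
                seen.add(dependent)
                queue.append(dependent)

    seen.discard(changed)  # only relevant if the graph contains a cycle
    return sorted(seen)


print(affected_by_change(DEPENDS_ON, "data"))
print(affected_by_change(DEPENDS_ON, "logging"))
```

In this toy graph, touching `data` puts `reports`, `services` and `ui` at risk, while touching `logging` reaches every other subsystem.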

Update: see also my del.icio.us links about static analysis: http://del.icio.us/KirillOsenkov/StaticAnalysis